AI, Big Data, Automation


Several years ago I ran a few large online publishing groups. At the time we tried hard to provide high-quality user content and real value for audiences, but I felt that lower-quality, high-volume providers seemed to do a lot better.

You will always have people who try to work the system. But lately I have seen a rapid rise in sites with real user-focused content. This is especially true of legitimate review sites and sites with product information and buying guides.

- Mattress Junkie - reviews of mattresses and linens
- The Coffee Insider - product information and guides for espresso and coffee machines
- Modern Biker - research and original reviews for urban users of electric bikes and scooters
- Ugly Christmas Sweater Ideas - ugly sweater product reviews, buyer guides and related content

Swarm technology has lots of applications, not the least of which is military. In Syria, a Russian airbase was hit by a coordinated swarm attack. Swarms will revolutionize many areas and add tremendous value, but we also need to prepare for the worst. Read the news story here.

“Career Is Over.” That is what people jokingly said CIO stood for when I took my first CIO position years ago. The CIO role has changed a lot. Its profile and impact have never been higher but, as I wrote this week on CIO.com, the job is becoming about eliminating other people’s jobs. For the first few decades, the CIO role focused on empowering employees to be more productive. With the internet, that continued, but the focus started to shift to making customers more self-reliant. Today the role is focused on the automation potential that has been unlocked by machine learning, big data, analytics, IoT, robotics and other technologies.

AI is the new frontier in technology development and it goes together with job destruction or replacement. The September report by PwC, ‘Workforce of the Future’, showed that typical ladder-climbing career fields were on the decline. The culprits: robots and AI. I also read in a study conducted at Oxford University that 47% of all jobs in the US are at high risk of being automated within the next two decades.

In relatively few years we have seen AI learn to play chess, master instruments, create art, drive cars and even write code. But why did it get so smart so suddenly? Moore’s law observes that the number of transistors on a chip doubles roughly every two years, so computing power grows exponentially. Practical AI applications were unimpressive during the first couple of decades, but by 2025 we will see tremendous improvement. A mere two decades after that, we will witness the first human-level AI. Celebrities like Elon Musk and Bill Gates are expressing alarm, and news coverage of machine learning references Skynet from the Terminator films.

CIOs need to frame automation investments in a way that sounds less hostile than what it really is. For innovative technologies to develop, and AI in particular, jobs need to be destroyed to free up resources and make way for new development. CIOs will need to lead here.

Automation can be transformative for organizations that take the lead and disastrous for those that choose to follow. Major executives will get on board because automation will solve problems they never thought solvable and unlock massive economic value. Gartner estimates that AI will create over $2.5 trillion USD in economic value within a few years. The cost of this progress, unfortunately, will be dire. An Oxford-Yale survey hinted at the disappearance of all routine-type jobs by 2030. By 2060 most jobs will have been impacted by automation. It might sound like something out of a movie, but the stark reality is that it is coming, whether we like it or not.

My latest article on CIO.com is about how machine learning can be a scalable solution for cleaning and standardizing data. There is massive, untapped enterprise value being lost because so much data isn't in a state that makes it useful for your reporting, BI and analytics tools.

Standard approaches to fixing data and preparing it for enterprise projects are unscalable and often devolve into brute-force, surprisingly manual fixes. This is where machine learning can be a huge lifesaver. Because algorithms learn more the more data you throw at them, machine learning can be a scalable, cost-effective way to start really utilizing your data. To read more please read my CIO.com article.

I actually love this debate and I understand the arguments against creating a C-level position for an AI leader in your company.

- IT should all be under one roof.
- AI is a tool/product/programming approach. Why that and not a chief of REST, blockchain, data or automation?
- You couldn't draw organizational lines that make sense with the CIO.

There are plenty of other ones and many do point out legitimate areas of concern that would need to be addressed. But as I said in my CIO.com op-ed, I think the strategic impact of AI warrants the work.

And That Is About To Get Scarier

Artificial intelligence already decides who you are. Your reputation online influences whether people date you, hire you, buy from you, rent to you, loan you money, admit you to their school and much more. Many of these decisions involve subjective and highly personal calculations. AI will impact lives directly and in deeply personal ways.

New AI will determine your reputation, and other AI algorithms will learn about you from that information and make decisions about you. This means that a lot about our lives will depend upon competing versions of interconnected AI, each of which reflects the professional interests and biases of its owners and developers. These interests are focused on maximizing the AI owners' benefits, and that can be at odds with your pursuit of happiness.

Google is one of the top AI researchers and has used AI to improve search results for a few years. Its RankBrain algorithm evaluates new content about you. This allows Google to deliver more relevant search results than traditional techniques like keyword analysis and website link scoring. Two thirds of people trust online searches more than any other source when researching things like purchasing decisions, job candidates and dates.

Google optimizes search results to maximize users’ clicks, because it interprets clicks as reflecting what searchers find relevant. But that doesn’t necessarily align with what people want others to see about themselves. A doctor may have delivered a thousand babies and may spend every weekend ladling soup at a homeless shelter. But if a news article about her DUI arrest from 1999 gets the most clicks, then that is what Google will display first for her name.

Google AutoComplete, which makes suggestions that pop up as you type in the search bar, flavors opinions even when it doesn’t reflect actual content. Search activity determines those suggestions. Soon predictive algorithms will determine what is relevant about you and make suggestions on their own. That shift is important. It moves us from recommending things based on human activity to AI determining what will be or what should be.

Dating sites will be heavy users of AI. Tinder chairman Sean Rad announced the company's plans to use artificial intelligence to help reduce the noise and effort of searching profiles. This means that Tinder will have even more influence over who its members see and therefore date. The next step is to predict whether you are looking for casual hookups or serious relationships at a given time of day and week or stage of your life. If you think your mother telling you it’s time to settle down is intrusive, imagine Tinder telling you that it thinks you are ready to settle down so it found a match for you.

Recruiting is a valuable but labor-intensive and error-prone field. Jobs are vacant an average of one to two months and a bad hire can cost a company more than three months’ salary in losses. Most recruiters say finding the best candidates in a big database is the largest part of their job. Applicant tracking systems go beyond keywords to predict who will make the best candidate. Karen.ai is a cognitive recruiting chatbot that develops personality insights from interactions with applicants.

Recruiting companies get paid when a candidate is hired. So, much like dating apps, they are focused on maximizing successful matches by predicting who the hiring managers will hire. Corporate recruiting platforms share that goal but also have an incentive to predict job performance. In either case, these platforms are not there to find you a job or maximize your opportunities. So, if your profile doesn’t appeal to AI, you are just not going to find work.

Online review sites are starting to use AI to improve the quality of customer reviews, which are prone to mischief and errors. Businesses with predominantly one- or two-star reviews lose as much as 90% of sales prospects. As more people join the gig economy, the review and ranking features of freelancer platforms and peer-review sites will determine a lot of hiring decisions. AI will interpret reviewer intent and motivation to weed out inaccurate or malicious reviews and improve reliability.

The healthcare industry is a major consumer of AI and analytics to improve care and to reduce complexity and cost. Companies are already using AI to predict medical conditions based on patients' medical records, behaviors and symptoms. Some are providing direct feedback to patients in real time to influence behaviors. Here AI arguably may resemble “big mother” more than “big brother.” Car insurance companies experimented with tracking devices in cars to reward safe driving. A next step in healthcare would be analyzing Facebook or Snapchat pictures and posts to look for weight gain, risky pastimes or signs of depression.

In the justice system, prosecutors and defendants are not looking for justice, per se. Prosecutors are looking for successful prosecutions and defendants are looking for exoneration. Prosecutor AI will help determine strategy for each case. So, what if the AI says that a rich, white suspect is more likely to reject a plea bargain, put up a rigorous defense and be exonerated than a younger, poorer minority suspect? AI will also inform parole decisions, which focus on reducing recidivism, so biases matter a lot.
Of all the discussion of artificial intelligence and automation, how it determines things about us gets surprisingly little coverage. This may be a sign that we are still not ready to face our deepest fears of AI. Job automation may feel inevitable because of technology, but it isn’t personal. If AI replaces your driver or even your doctor, we trust it is because the data proves it is better for us.

A passport photo screening system rejected a picture of an applicant of East Asian descent because it interpreted his eyes as closed. Google Image search tagged at least one photo of an African American in a highly offensive way. It is wrong to write these off as bugs in early versions of technology. They reflect an important truth: like children, AIs pick up the biases of their parents.

The argument that AI will eliminate biases in making decisions about people misses a critical point. The owners and developers of AI will eliminate biases only if there is a benefit in making that a priority. AI supercharges decisions about people, and that means some biases could be magnified instead of eliminated.

Reputation is different from other optimizations. It is both quantitative and qualitative, and your reputation and the decisions it influences are deeply personal. There is great opportunity for misalignment between your interests and those designed into the AI algorithm. Special care should be put into AI that influences our reputations or makes subjective decisions about us.

We Need To Talk More About Vision and General AI

I have written before that the AI industry's own PR is hurting the industry. We need to really start talking about the vision for AI. There is too much talk about challenges like privacy and how we manage the coming shift in jobs, and not enough about the positive effects. In short, there really isn't a vision being shared and there is no chief evangelist for the industry, so the most reasonable, well-informed voices are not getting through.

The jobs topic is bad enough. We can't just say that tens of millions of jobs will be destroyed and not map out a vision for the future. But this talk about artificial intelligence spawning the Singularity and killing us really needs to stop. Most current "AI" related technologies, including machine learning, speech, facial and image recognition, virtual assistants, decision management, etc., really have nothing to do with general intelligence anyway. People need to understand that equating most commercial AI products with Skynet is no more accurate than treating Tron as a reflection of how computers work.

Of course, we need safeguards. But most AI tends to be more like innocent children, not Cylons. These technologies will be tools in our most critical systems and processes, touching everything from food and medicine to transportation and defense, so we need to be careful, the same as with any automation-related technology. But we cannot permit the image of evil intent to go unchallenged, because prejudice is a tool used by those who want to do nefarious things.

I wanted to write a quick primer you could send to a non-technical executive that would allow them to quickly understand some popular terms, applications and the key vendors associated with each.

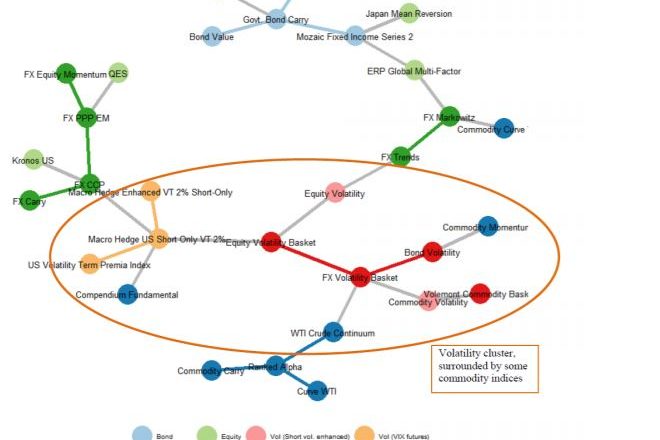

If you are trying to move any AI-related initiative forward you need to get the business on your side. So create some simple summaries to help executives in your organization understand what can be done and which partners and vendors you need to know about.

I admit that I mostly enjoy looking forward. Looking back can be cringe-worthy - parachute pants and Vanilla Ice and so forth. Like a lot of people, I got into tech because I prefer to think about what can be done versus what was done. So it was a total accident when I stumbled upon this Advertising Age article that used a few quotes from an interview that I did in 2003. At the time I was pretty focused on using data analytics to do a better job of online marketing. A lot of what is second nature and obvious today was still new or even future tech then.

Stone Age Analytics - 2003 AD

The Early 2000s Was a Simpler Time for TV but not analytics.

The interview came from work I was doing on advertising optimization. We were doing early work on slicing our analysis by the viewer's time. Advertising and commercial analytics tool sets were primitive. The company I was working with provided a broad range of outsourced marketing and advertising services, from ecommerce (we had just launched the company's first platform), to direct mail (yes, postal mail), to outbound and inbound customer service and sales calls and marketing creative services. I had a million square feet of fulfillment and 600 or so call center seats.

The systems were on different hardware platforms and my office was in a raised-floor data center with server racks as my only view. Mid-range and PC platforms were everywhere: UNIX boxes, IBM mid-ranges and so on. Integration involved a lot of file transfers, especially CSV, and even some email and FTP. Mostly it involved us creating our first data warehouse by hand-writing ETL and then using shockingly complex SQL statements to get at the data we wanted. There was no metadata for most of the sources, and we dealt with very different data standards used by our clients, their vendors and partners, and the laundry list of agencies and data service companies we worked with to enrich or validate our data.

Insights From The Big Data Dark Ages

The point of this was mostly to look for usable patterns in the data. Once we found data we wanted to use, we would then move some logic to code. The more we looked, the more interesting stuff we found. It was real analytics and it certainly was Big Data, although nobody had coined that term yet. One thing it wasn't was Artificial Intelligence or Machine Learning. We iterated our way through the analytics, usually coding and scripting until the data looked usable and then trying to figure out if we had found what we were looking for. Half the time it was difficult to know what to do with the insights, and I never quite knew how much to trust them.

Fast forward to today and the tools, and especially the code, have changed a lot. It certainly makes what we were doing look primitive, inefficient and superficial. Harnessing and accessing Big Data has improved dramatically, analytics tool sets can automatically generate insights that took us days or weeks to get at, and code now learns through iteration the way we once did.

We Have Learned But We Haven't Evolved

Recently MIT Sloan published a story illustrating how AI will likely widen the growing gap between our ability to leverage analytics and Big Data to generate insights on the one hand and the ability of managers to do something useful with them on the other. We need solutions to address the fact that accelerating developments of all sorts are going to increasingly make people the weak link in an ever stronger chain. I think the skill set of the manager of the future needs to look a lot different or we risk a dependency on systems that will lead to a lot of lost opportunity.

Gatorade commissioned this machine for a commercial. It took 5000 hours to build and uses 2048 nozzles that release water with millisecond timing and strobe lights to create animation from the reflections. This is not special effects or CGI.

Finance is a critical industry in the development and commercialization of AI in general and of near-term machine learning applications specifically. JP Morgan's May report 'Big Data and AI Strategies - Machine Learning and Alternative Data Approach to Investing' was intended to focus on technical applications of big data to investment analysis. But as this news story on efinancialcareers points out, it is extremely valuable as a way to articulate the skills needed to execute the strategy.

Machine learning is a great technology that is moving really quickly. But the direction is also clear. I said before that every company needs to look at how artificial intelligence will be applied to its business. A half-joking point I made was that everyone's job becomes learning how to use AI to eliminate other people's jobs. Artificial intelligence isn't going away, and regulation, ethics or ignoring the impact on the jobs market won't move us forward. This disruption is going to be more transformative than previous technological disruptions, including smartphones, the internet, ecommerce or the PC revolution, because it will destroy jobs at a much faster rate than it creates them and it will destroy high-paying, professional jobs as well. It is time to make it a national priority to decide how we handle it.

Human cloning has been the subject of plenty of science fiction, but the process is extremely complex. It has become one of those developments that is always just a decade or so away... every decade. In my opinion this isn't where the action is. The applications are not that valuable, and the costs, complexity and ethical roadblocks slow it every step of the way.

Gene manipulation has many more applications and is already ongoing. By reprogramming genes we can seemingly address an amazing range of conditions, ailments and maladies. Unlike cloning, this has direct and extremely valuable commercial applications. So I suspect that while we watch and fret over cloning, gene manipulation will help us to build a better future.

When someone is 100% right and still fails to win an argument decisively, ask yourself why. The data is conclusive. Increases in greenhouse gas emissions are in fact making the earth warmer. The reason why there is such a concerted effort to deny, obfuscate and distract from the issues is in part because of Paul Ehrlich.

The worst thing ever to happen to the environmental movement, and to climate change in particular, was Paul Ehrlich's The Population Bomb. Among other things he predicted there would be no UK in the year 2000; that mass starvation was unavoidable; that India would be decimated and that 60 million would starve in the US alone. Ehrlich wasn't alone. There were others who looked at some simple math and predicted the deaths of hundreds of millions or billions of people. The calculations were simple: multiply crop production by the available land to grow food, then extend population growth forward to a full-fledged 'population bomb', and a massive deficit in food production was inevitable. So of course this would lead to resource wars and worldwide famine.

Meanwhile others, most importantly Norman Borlaug, created the green revolution and figured out how to feed MORE people with the available land. Those who bought into The Population Bomb fell into massive Malthusian logical fallacies and concluded that people were the problem. We had too many people. People were having too many babies. They expressed these concerns in the most outlandish of exaggerated claims. Eventually these Chicken Littles were not only discredited, they became a go-to bogeyman for anyone who didn't like what they were told.

I believe we can always make things better. Most of the major trends of the world, from industrialization to trade to immigration to computerization to the internet and social media, have made the world, on balance, a better, safer, healthier place. Consuming resources IS the goal. We should be able to have more people, using more electricity and water and eating more meat and so on, without destroying the planet. No, there is no path to making that work today. Greater resource consumption is directly connected to greater emissions. We just need to think smarter about things.

I believe we could make a lot more progress on climate change if those on the correct side of it would agree to focus on how to have our cake and eat it too. It is funny how that expression is meant to highlight sacrifice. Similarly, too much of the modern environmental movement focuses on slowing progress and making people poorer and less free. Is that really the best we can do?

Climate change deniers see themselves as identifying this generation's Ehrlichs: exaggerators who can't be trusted. Ignore them and they will be proven wrong sooner or later. Meanwhile, some smart guy in a lab somewhere will probably solve the whole thing anyway, they assume. The biggest targets are alarmist predictions. Denier sites are littered with quotes from the 1980s through An Inconvenient Truth, released over a decade ago, that they say are full of disproved doomsday scenarios.

This week the world's first commercial carbon capture plant came online. It is 1000 times more efficient than photosynthesis at capturing carbon from the air. Climeworks, the startup that created the facility, doesn't want to be identified as saying this alone will solve the problem. Not even close. But the question is, can't we refocus the climate change discussion away from arguments that we need fewer people, living poorer lives and consuming fewer resources? If you believe that together we can do amazing things, then let's agree to focus on solutions, not prohibition.

This Harvard Business Review article shares some examples of how machine learning is being applied to optimizing business processes. The examples are good ones. 44% of US consumers prefer chatbots to humans. Customer relationships are improved by anticipating behavior and customizing strategies. Machine learning is being applied to screening and shortlisting job applications and to improving fraud detection and security systems.

The article is a light piece with these and just a few other examples. It doesn't speak to the patterns behind these developments. AI is being used as a tool to optimize, but it is also doing more and more things better than humans. The impact of AI is such that nearly every organization will need an AI strategy and, just as they get one, will realize that AI is not something that gets bolted on but something that gets applied ubiquitously. In other words, it becomes everyone's job to eliminate everyone else's job.

This is not to argue that AI should (or could) be slowed or avoided. But it is different from mobile smart devices, social media, online commerce and payments, the internet or even the PC or communications revolutions. AI will eliminate jobs much faster than it will create new ones. The unintended consequences will be huge. Massive shifts in employment could give way to a dramatic overall reduction in the demand for all labor.

Those earlier (and still ongoing) technical revolutions did a good job of creating wealth and do create certain types of jobs. Most of my associates and I built careers on that foundation. But AI is not a tool like the others. It is a better way of thinking. Let's hope someone builds an AI that can figure this out for us.

Bill Gates and now Mark Zuckerberg have used public forums to talk about the coming seismic shift in jobs that learning machines and automation will bring. But I worry that what they are actually doing is softening the message for their audiences. Zuckerberg focused in his Harvard commencement address on the impact on low-end jobs. Gates sounded his trademark optimistic tone about the future. While I agree that the stats don't lie and we live in an unprecedented period of peace and declining poverty, I worry that both of these tech leaders may be sparing their audiences a painful message.

Be wary of any conversation about AI and automation that focuses exclusively on self-driving cars, warehouse workers and the like. We can deal with those because it implies there will be plenty of opportunity for high-paying white-collar workers. You can write the whole thing off as an overall positive element of development: from subsistence farmers to information workers in a few centuries. Affected workers can hope to get enough time out of their careers to put their kids through graduate school and look forward to their family moving up.

Instead, what if Zuckerberg stood up at Harvard and said the real risk is to high-paying jobs, including those of architects, engineers, lawyers, accountants, managers, doctors and even computer programmers? Presumably, there were few long-haul truck drivers, current or future, in the audience at the Harvard commencement. What if the pair came out and said that programming jobs are at risk? How differently would their audiences respond?

Our economy and society arguably depend upon a few agreed-upon premises: social mobility; progress destroys jobs but creates better ones; people need work and will lower standards if forced to. If graduates with JDs cannot find jobs as lawyers and begin competing with business graduates for management or consulting jobs, and those people compete with salespeople for their jobs, where does it end?

You cannot run our economy on taxing billionaires alone, in spite of that tiny group's value in political campaigns. The top 1/8th of US households have incomes well above $100k a year. The majority of these people rely on wages, not capital gains or investment income. They are wage earners, from dual-income sales couples and programmers to dentists, lawyers, doctors and CEOs. Decimate this group and you will have real problems.

Imagine the best-educated parts of society competing for shrinking job opportunities. What if we create too few robotics engineering and automation design jobs to make up for this? This group represents the upwardly mobile hopes of millions of working-class people. Drivers may accept that their job won't always be around so long as their kids can go to medical school. Without that hope and the critical tax base this population represents, how can we hope to invest and innovate our way through the job disruptions?

The Verge and others are reporting that Apple's big bets on AI are leading to product innovations sooner than you may expect. This includes plans to add dedicated AI processing in future versions of the iPhone. The initial use of this is an important one. A lot of needed developments in smartphones are code intensive. This includes augmented reality, biometrics, simulations and advanced encryption technologies. This is not to mention the potential for sci-fi inspired leaps in automated assistants.

Tasks that are very code intensive are therefore processor intensive, and that makes them power intensive and therefore battery intensive. Add AI chips to iPhones and you reduce the processing power that needs to be dedicated to these tasks and make smaller batteries last longer. This is important because productizing AI technologies will drive massive increases in innovation. Profits, manufacturing scale, increased job creation and the attraction of investment dollars are the fastest way to accelerate any field. Academic, government and venture investment are important too, but the drive they provide is very different.

When the field gets pushed forward by the economic tsunamis that products like the iPhone create, it will lead to more rapid advances on the other end of the computing spectrum. Today it can take supercomputers up to a year to run certain simulations. Quantum chromodynamics is the theory of strong interactions and underlies models of nuclear forces. Astrophysical and cosmological dark matter simulations can take months. So when you buy future iPhones you may be powering the forces that will unlock our understanding of everything from atomic processes to the very structure of the universe.

Last November Microsoft and the University of Cambridge published a paper about how their AI was writing code. The process includes scanning bits of code and considering lots of possibilities for how those bits could fit together to create the right solution. This was falsely reported as Microsoft AI plagiarizing existing programs. That says something interesting about us and about what learning really is.
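To make the idea of fitting bits of code together concrete, here is a toy sketch in Python. It is not the method from the Microsoft and Cambridge paper; it is a plain brute-force search, and the building blocks (`sort`, `reverse`, `double`, `drop_negatives`), the function names and the examples are all hypothetical, chosen only for illustration. Given a few input/output examples, it tries every short sequence of building blocks until one reproduces all of the examples.

```python
from itertools import product

# Hypothetical building blocks: small list-to-list operations.
PRIMITIVES = {
    "sort": sorted,
    "reverse": lambda xs: list(reversed(xs)),
    "double": lambda xs: [2 * x for x in xs],
    "drop_negatives": lambda xs: [x for x in xs if x >= 0],
}


def run(program, xs):
    """Apply each named primitive, in order, to the input list."""
    for name in program:
        xs = list(PRIMITIVES[name](xs))
    return xs


def synthesize(examples, max_length=3):
    """Return the first shortest sequence of primitives that matches
    every (input, output) example, or None if nothing fits."""
    for length in range(1, max_length + 1):
        # Try every ordering of `length` primitives (repeats allowed).
        for program in product(PRIMITIVES, repeat=length):
            if all(run(program, inp) == out for inp, out in examples):
                return list(program)
    return None


# Illustrative examples: "sort descending, then double each value".
examples = [
    ([3, 1, 2], [6, 4, 2]),
    ([5, 0, 4], [10, 8, 0]),
]
print(synthesize(examples))  # ['sort', 'reverse', 'double']
```

Nothing here is copied from an existing program. The search recombines known pieces until the result behaves correctly, which is closer to how people reuse familiar techniques than to plagiarism. A search like this grows explosively as programs get longer, which is why real systems need smarter ways to decide which combinations to try first.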

We all accept that technological change destroys some jobs and creates new ones. We remain excited about the process because, in general, the newly created jobs tend to be good ones and the innovation lead to positive things for society. As bad as factories were, the small-scale farming people left behind had its issues too. The personal computer revolution created entirely new categories of white collar jobs in the tens of millions. Because of advances in tools and training, it became easier to become a professional computer programmer even without a college degree in computer science. My grandfather turned wrenches, my father was the first in our family to work in an office and I work with computers. One worry about the AI revolution is what if this one doesn’t create new jobs like the past revolutions did? When you conduct media interviews you get a peak into the process of creating stories. I have seen how journalists find new ideas for new stories in academic and other original sources of news and report on them in industry press. If they are interesting enough then reporters in mainstream press will pick it up. It is often a lot of steps from medical journal to the table in your dentist’s waiting room. It is a complicated process whereby journalists learn about a topic and then simplify it and make it accessible and interesting to their audience. Some reporters do it very well but others get parts wrong. Much like a game of telegraph (or telephone?) reporters will find story ideas from other reporters, often without reading the original source materials. When a reporter misinterprets some coverage and drives an engaging conclusion that can be picked up by another journalist in another story, and so on and so on. The process of how stories are generated reflects a lot about how humans learn and how original works are created. Even the most novel innovation contains some derivative parts. Solving new problems means building upon what is done before. 
This is natural, and the line between this and plagiarism boils down to how much originality has been added to what already existed. One of the current fears of AI is that it will replace us in our jobs. One intellectual life preserver for many people in tech is that programming is a creative job that reflects how differently we think than AI ever possibly could. This argument boils down to the view of AI as a tool for automation and not one for actual creation. So if AI steals, that means it isn’t creating, and programming jobs, along with whole categories of other jobs, are safe. The particularly scary scenario is that AI creates itself and destroys some jobs but does not create new types of jobs. But AI is already starting to create AI, such as this experiment at Google Brain. It is also predicting cancer better than doctors, fighting parking tickets, creating art and stories and yes, writing code. So one of the first things we need to do to prepare for the full impact of AI is to rethink how we think about creativity and learning.

Decide now how you feel about AI powered drones autonomously patrolling the skies. Imagine them learning from successes and failures and using new experiences to make decisions about how to apply rules of engagement to potential targets below. Drones have been one of the most controversial new developments in warfare. Your view about a drone in Afghanistan being operated by a military person sitting down the street from a suburban Red Lobster in America is something of a litmus test for your views on the use of the military writ large. But autonomous flying vehicles are a separate issue altogether. A Carnegie Mellon Robotics Institute professor recently let a drone learn how to fly indoors by letting it crash over 11,000 times in 20 different indoor spaces. Watch the video for more. One of the big questions regarding the future impact of AI, and the likelihood of it doing things we don't want it to, is the extent to which its abilities can be extended.
I understand the argument that people are prone to making decisions we don't like too. But that alone is not an argument against treading very carefully here.

Read more in this Digital Trends article and tell me what you think.

Microsoft CEO Satya Nadella's keynote at the Microsoft Build event on May 8th 2017 raises important questions about the role that the tech industry has in making sure AI doesn't generate deeply damaging unintended consequences, but it also skirts the impact of intended consequences. It also highlights a scary truth: AI has long since left the academic research projects and is in the hands of real product development teams. Satya says something I have not seen before. He points out that the future impact of AI depends upon the decisions of the individual developers, program managers, business analysts and others who make the actual product design decisions behind the software that we use. In my time as a manager in Microsoft's product development organization in Redmond I saw the enormous scale of these software projects. Some are huge, but many are run by small to medium sized teams with the autonomy to do really whatever they want. I oversimplify a lot, but the important point is that software isn't really part of some large plan. It is the sum of a huge number of individual decisions made by lots of people.

This is a real issue and the big AI houses - including Microsoft, Facebook, Apple, Amazon, IBM - should address it with internal standards and safeguards. Individual responsibility is crucial, and so is creating a culture that cares about the impact of AI and not just its advancement and application. The unintended consequences of AI gone wrong are troubling enough. But we need to really start addressing the intended ones. At the same presentation Satya spoke about the impact on blue collar jobs, but that isn't the end of it all. The destruction of millions of driver and warehouse jobs is not the really big problem to solve, although the impact will be huge. The bigger problem is what happens when large numbers of high-paying information age and white collar jobs across a very broad range of fields, including law, medicine, IT, programming and accounting, are made unnecessary but are not replaced by new categories of jobs as quickly.

I don't think that AI is going to kill us. For starters, I think there are too many smart people worried about that, and there are obvious disincentives to creating a self-learning system with that potential. I understand the argument that creating AI is all about creating systems that can develop beyond their programming. The road to killing us is long and filled with enough mistakes that even an arguably fast maturing singularity won't get there.

What I do worry about is the social impact of AI's effect on the global economy. I am in the camp that believes that few jobs cannot ultimately be eliminated. That won't happen overnight, and by then we will have had to deal with a major overhaul of employment as a concept. The issue for the next few decades is that most jobs will be dramatically streamlined with major efficiency improvements. The same thing impacted most fields during the big PC, network and internet revolutions from the 1970s to today, but we survived because those revolutions lowered effective costs and created entire new categories of jobs, especially high paying ones with trickle down benefits and opportunity. These results didn't trickle all the way down, and too many people couldn't make the transition, leading to a rise in low pay service jobs and underemployment at the same time that companies struggled to source enough new tech workers.

AI and robotics will accelerate with advances from quantum computing. Then, paired with improved technologies in VR and IoT, this will reduce the demand for jobs faster than it creates new ones. Take legal, for example. AI doesn't have to replace lawyers, but if it streamlines contracts and advisory services enough as a tool for lawyers, then the demand for lawyers is reduced. More formulaic things will automate completely, as evidenced by a Stanford student automating traffic ticket defenses. Lawyers are smart but those skills are only so transferable. That makes the supply of legal services relatively inelastic. When the same thing is happening in computer hardware and software, medicine and other major white collar and information age fields, where do these people go? In this blog I am going to explore these issues and also touch on emerging thinking on how we address these things.
This includes the periodic waves of attempts to suppress it, like regulation or protectionism, but I will focus more on solutions like reinventing education and guaranteed minimum income.

Author

Michael Zammuto is the CEO of Completed.com and Cloud Commerce and a strategic adviser to several startups. Mike's background is in SaaS services, B2C sites and B2B firms, and he has worked extensively in online reputation, digital marketing and branding.

Archives

October 2019
